Browse Facebook, and you wouldn’t expect Facebook’s advertisers to learn who you are. After all, Facebook’s privacy policy and blog posts promise not to share user data with advertisers except when users grant specific permission. For example, on April 6, 2010 Facebook’s Barry Schnitt promised: “We don’t share your information with advertisers unless you tell us to (e.g. to get a sample, hear more, or enter a contest). Any assertion to the contrary is false. Period.”

My findings are exactly the contrary: Merely clicking an advertiser’s ad reveals to the advertiser the user’s Facebook username or user ID. With default privacy settings, the advertiser can then see almost all of a user’s activity on Facebook, including name, photos, friends, and more.

In this article, I show examples of Facebook’s data leaks. I compare these leaks to Facebook’s privacy promises, and I point out that Facebook has been on notice of this problem for at least eight months. I conclude with specific suggestions for Facebook to fix this problem and prevent its reoccurrence.

Facebook’s data leak is straightforward: Consider a user who clicks a Facebook advertisement while viewing her own Facebook profile, or while viewing a page linked from her profile (e.g. a friend’s profile or a photo). Upon such a click, Facebook provides the advertiser with the user’s Facebook username or user ID.

Facebook leaks usernames and user IDs to advertisers because Facebook embeds usernames and user IDs in URLs which are passed to advertisers through the HTTP Referer header. For example, my Facebook profile URL is http://www.facebook.com/bedelman. Notice my username (yellow).

Of course, it would be incorrect to assume that a person looking at a given profile is in fact the owner of that profile. A request for a given profile might reflect that user looking at her own profile, but it might instead be some other user looking at the user’s profile. However, when a user views her own profile page, Facebook automatically embeds a “profile” tag (green) in the URL:

http://www.facebook.com/bedelman?ref=profile

Furthermore, when a user clicks from her profile page to another page, the resulting URL still bears the user’s own user ID or username, along with the details of the later-requested page. For example, when I view a friend’s profile, the resulting URL is as shown below. Notice the continued reference to my username (yellow) and the fact that this is indeed my profile (green), along with an appendage naming the user whose page I am now viewing (blue).

http://www.facebook.com/bedelman?ref=profile#!/pacoles

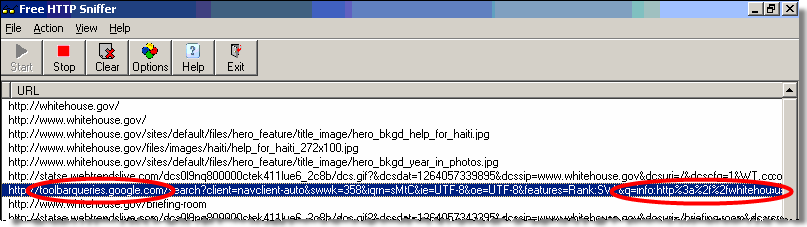

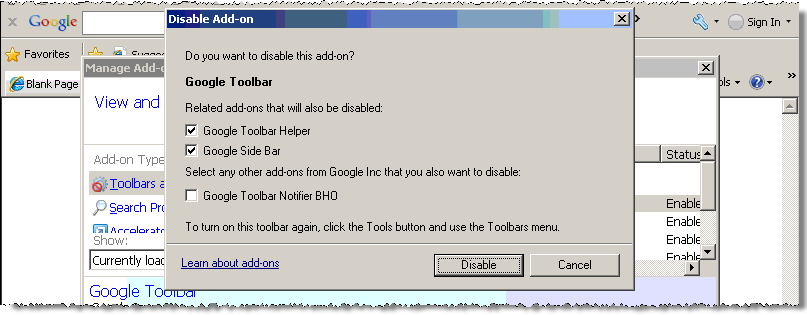

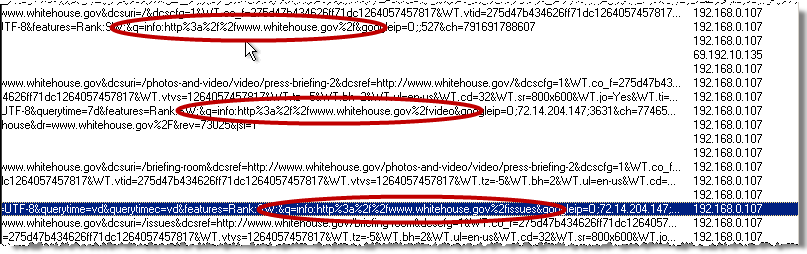

Each of these URLs is passed to advertisers whenever a user clicks an ad on Facebook. For example, when I clicked a Livingsocial ad on my own profile page, Facebook redirected me to the advertiser, yielding the following traffic to the advertiser’s server. Notice the transmission in the Referer header (red) of my username (yellow) and the fact that I was viewing my own profile page (green).

GET /deals/socialads_reflector?do_not_redirect=1&preferred_city=152&ref=AUTO_LOWE_Deals_ 1273608790_uniq_bt1_b100_oci123_gM_a21-99 HTTP/1.1

Accept: */*

Referer: http://www.facebook.com/bedelman?ref=profile

…

Host: livingsocial.com

…

The same transmission occurs when a user clicks from her profile page to a friend’s page. For example, I clicked through to a friend’s profile, http://www.facebook.com/bedelman?ref=profile#!/pacoles, where I clicked another Livingsocial ad. Again, Facebook’s redirect caused my browser to transmit in its Referer header (red) my username (yellow), the fact that that username reflects my personal profile (green). Interestingly, my friend’s username was omitted from the transmission because it occurred after a pound sign, causing it to be automatically removed from Referer transmission.

GET /deals/socialads_reflector?do_not_redirect=1&preferred_city=152&ref=AUTO_LOWE_Deals_ 1273608790_uniq_bt1_b100_oci123_gM_a21-99 HTTP/1.1

Accept: */*

Referer: http://www.facebook.com/bedelman?ref=profile

…

Host: livingsocial.com

…

In further testing, I confirmed that the same transmission occurs when a user clicks from her profile page to a photo page, or to any of various other pages linked form a user’s profile.

With a Facebook member’s username or user ID, current Facebook defaults allow an advertiser (and anyone else) to obtain a user’s name, gender, other profile data, picture, friends, networks, wall posts, photos, and likes. Furthermore, the advertiser already knows the user’s basic demographics, since the advertiser knows the user fits the profile the advertiser had requested from Facebook. For example, in grey highlighting above, the advertiser learned from Facebook my age, gender, and geographic location.

Facebook’s Contrary Statements about User Privacy vis-a-vis Advertisers

Facebook has made specific promises as to what information it will share with advertisers. For one, Facebook’s privacy policy promises “we do not share your information with advertisers without your consent” (section 5). Then, in section 7, Facebook lists eleven specific circumstances in which it may share information with others — but none of these circumstances applies to the transmission detailed above.

Facebook’s recent blog postings also deny that Facebook shares users’ identities with advertisers. In an April 6, 2010 post, Facebook promised: “We don’t share your information with advertisers unless you tell us to (e.g. to get a sample, hear more, or enter a contest). Any assertion to the contrary is false. Period.” Facebook’s prior postings were similar. July 1, 2009: “Facebook does not share personal information with advertisers except under the direction and control of a user. … You can feel confident that Facebook will not share your personal information with advertisers unless and until you want to share that information.” December 9, 2009: “Facebook never shares personal information with advertisers except under your direction and control.” As to all these claims, I disagree. Sharing a username or user ID upon a single click, without any disclosure or indication that such information will be shared, is not at a user’s direction and control.

Facebook Has Been on Notice of This Problem for Eight Months

AT&T Labs researcher Balachander Krishnamurthy and Worcester Polytechnic Instituteprofessor Craig Wills previously identified the general problem of social networks leaking user information to advertisers, including leakage through the Referer headers detailed above. In August 2009, their On the Leakage of Personally Identifiable Information Via Online Social Networks was posted to the web and presented at the Workshop on Online Social Networks (WOSN).

Through Krishnamurthy and Wills’ research, Facebook eight months ago received actual notice of the data leakage at issue. A September 2009 MediaPost article confirms Facebook’s knowledge through it spokesperson’s response. However, Facebook spokesperson Simon Axten severely understated the severity of the data leak: Axten commented “The average Facebook user views a number of different profile pages over the course of a session …. It’s thus difficult for a tracking website to know whether the identifier belongs to the person being tracked, or whether it instead belongs to a friend or someone else whose profile that person is viewing.” I emphatically disagree. As shown above, when a user views her own profile, or a page linked from her own profile, the “?ref=profile” tag is added to the URL — exactly confirming the identity of the profile owner.

Since receiving actual notice of these data leaks, Facebook has implemented scores of new features for advertising, monetization, information-sharing, and reorganization. Inexplicably, Facebook has failed to address leakage of user information to advertisers. That’s ill-advised and short-sighted: Users don’t expect ad clicks to reveal their names and details, and Facebook’s privacy policy and blog posts promise to honor that expectation. So Facebook needs to adjust its actual practices to meet its promises.

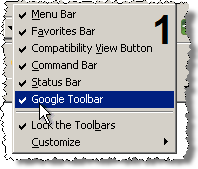

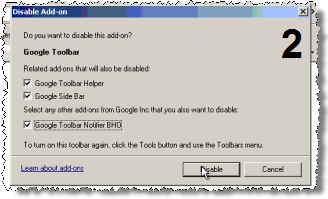

Preventing advertisers from receiving usernames and user IDs is strikingly straightforward: A modified redirect can mask referring URLs. Currently, Facebook uses a simple HTTP 301 redirect, which preserves referring URLs — exactly creating the problem detailed above. But a FORM POST redirect, META REFRESH redirect, or JavaScript redirect could conceal referring URLs — preventing advertisers from receiving username or user ID information.

Instead, Facebook has partially implemented the pound sign method described above — putting some, but not all, sensitive information after a pound sign, with the result that sometimes this information is not transmitted as a Referer. If fully implemented across the Facebook site, this approach might prevent the data leakage I uncovered. However, in my testing, numerous within-Facebook links bypass the pound sign masking. In any event, an improved redirect would be much simpler to implement — requiring only a single adjustment to the ad click-redirect script, rather than requiring changes to URL formats across the Facebook site.

Finally, Facebook should inform users of what has occurred. Facebook should apologize to users, explain why it didn’t live up to its explicit privacy commitments, and establish procedures — at least robust testing, if not full external review — to assure that users’ privacy is correctly protected in the future.

On May 20, 2010, the Wall Street Journal reported the problem detailed above. On or about that same day, Facebook removed the ref=profile tags that were the crux of the data leak.

I yesterday spoke with Arturo Bejar, a Facebook engineer who investigated this problem. Arturo told me that after Krishnamurthy and Wills’ article, he reviewed relevant Facebook systems in search of leakage of user information. At that time, he found none, in that Facebook revealed the URLs users were browsing when they clicked ads, but did not indicate whether the user clicking a given ad was in fact the owner of the profile showing that ad. However, in a subsequent Facebook redesign, beginning in February 2010, Facebook user home pages received a new “profile” button which carried the ref=profile URL tags I analyze above. Because this tag was added without a further privacy review, Arturo tells me that he and others at Facebook did not consider the interaction between this tag and the problem I describe above. Arturo says that’s why this problem occurred despite the prior Krishnamurthy and Wills article.

Arturo also pointed out that the problem I describe did not affect advertisers whose landing pages were pages on Facebook (rather than advertisers’ own external sites).

Meanwhile, Facebook’s May 24 “Protecting Privacy with Referrers” presents Facebook’s view of the problem in greater detail. Facebook’s posting offers a fine analysis of the various methods of redirects and Facebook’s choice among them. It’s worth a read.

After discussing the problem with Arturo and reading Facebook’s new post, I reached a more favorable impression of Facebook’s response. But my view is tempered by Facebook’s ill-advised attempts to downplay the breach.

- Rather than affirmatively describing the specific design flaw, Facebook’s post describes what “could” “potentially” occur. Facebook’s post never gives a clear affirmative statement of the problem.

- Facebook says advertisers would need to “infer” a user’s username/ID. But usernames and IDs are sent directly, in clear and unambiguous URLs, hardly requiring complex analysis

- Facebook claims that the breach affected only “one case … if a user takes a specific route on the site” (WSJ quote). Facebook also calls the problem “a rarely occurring case” (posting). I dispute these characterizations. It is hardly “rare” for a user to view her own profile. To view her own profile and click an ad? There’s no reason to think that’s any less frequent than clicking an ad elsewhere. To view her own profile, click through to another page, and then click an ad? That’s perfectly standard. Furthermore, although Facebook told the Journal there is “one case” in which data is leaked improperly, in fact I’ve found many such cases including clicking from profile to ad, from profile to friend’s page to ad, and from profile to photo page to ad, to name three.

- Through transmission in HTTP Referer headers, usernames and IDs appears reach advertisers’ web servers in a manner such that default server log files would store this data indefinitely, and default analytics would tabulate it accordingly. Facebook says it has “no reason to believe that any advertisers were exploiting” the data breach I reported, but the fact is, this data ends up in a place where advertisers could (and, as to historic data, still can) access it easily, using standard tools, and at their convenience.

- Although Facebook’s post says the problem is “potential,” I found that a user’s username/ID is sent with each and every click in the affected circumstances.

So the problem was substantial, real, and immediate. Facebook errs in suggesting the contrary.

Three years ago, I posted

Three years ago, I posted