I recently examined software from Coupons.com. At first glance their approach seems quite handy. Who could oppose free coupons? But a deeper look reveals troubling behaviors I can’t endorse. This piece summarizes my key concerns:

- Installing with deceptive filenames and registry entries that hinder users’ efforts to fully remove Coupons’ software.

- Failing to remove all Coupons.com components upon a user’s specific request.

- Assigning each user an ID number, and placing this ID onto each printed coupon, without any meaningful disclosure.

- Allowing third-party web sites to retrieve users’ ID numbers, in violation of Coupons.com’s privacy policy.

- Allowing any person to check whether a given user has printed a given coupon, in violation of Coupons.com’s privacy policy.

The Coupons.com business

Coupons.com offers users coupons which they can print at home, then redeem at retailers.

Coupons.com specifically promises users that they may "use as many [coupons] as [they] like." But in fact, Coupons.com takes great pains to limit how many coupons users can print. Rather than simply letting users print GIF or JPG coupons from an ordinary web page, Coupons.com requires that users install a coupon-printing ActiveX control. Coupons.com also customizes each coupon with information about who printed it and when. These design decisions increase the complexity of Coupons.com’s business — giving rise to the serious consent and privacy issues set out below.

Installing with deceptive filenames and registry entries

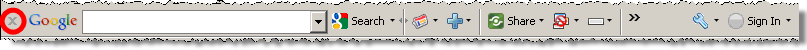

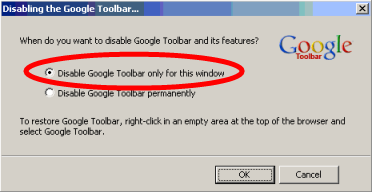

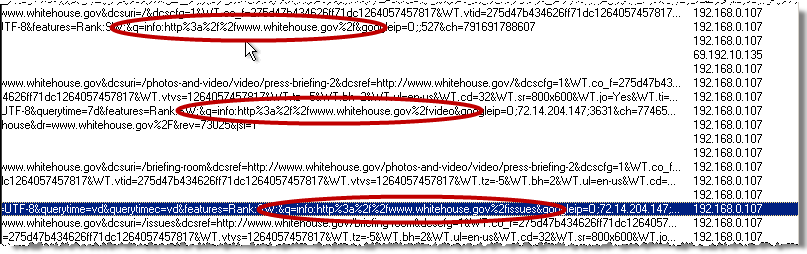

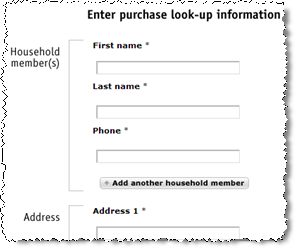

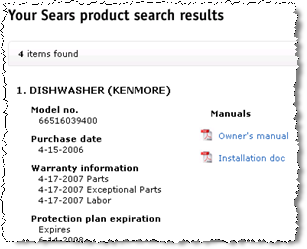

On an ordinary test PC that had never previously run any software from Coupons.com, I installed Coupons.com’s Coupon Bar 5.0 software. I requested a coupon to be printed, then ran an "InCtrl" comparison of changes made to my computer. InCtrl revealed the following new files and registry entries:

c:\windows\uccspecc.sys

c:\windows\WindowsShellOld.Manifest.1

HKEY_LOCAL_MACHINE\SOFTWARE\ClassesManifest.Template.1

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\uccspecc

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Controls Folder\Presentation Style

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Explorer\EnableAutoTrayHistory

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Internet Settings\URLDecoding

Each of these entries consisted of a 30 to 90-letter string of gibberish. For example, the contents of uccspecc.sys exactly matched the contents of the first three registry entries: HtmWSrewvuaCGtKrVlXxMKdbMkLfgHq.
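The before-and-after comparison described above can be approximated with a simple set difference. The sketch below is only an illustration, not InCtrl's actual implementation: the function name `new_entries` and the two snapshots are hypothetical, and a real tool would build its snapshots by walking the file system and the registry.

```python
def new_entries(before, after):
    """Return items present in the 'after' snapshot but not in 'before'."""
    return sorted(set(after) - set(before))


# Hypothetical snapshots: paths recorded before and after installation.
before = [r"c:\windows\explorer.exe"]
after = before + [
    r"c:\windows\uccspecc.sys",
    r"c:\windows\WindowsShellOld.Manifest.1",
]

for path in new_entries(before, after):
    print(path)
```

The same comparison works for registry keys if each key is recorded as a string.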

Others have also noticed these oddly-named files. For example, McAfee SiteAdvisor reports every file and registry entry Coupons.com creates.

These Coupons.com filenames and registry keys are deceptive, for at least three different reasons.

1) The labels falsely suggest that the components are part of Windows, rather than third-party add-ins. For example, the files and registry keys are placed in locations reserved for Windows itself, not for third-party applications. Furthermore, Coupons.com’s choice of filename and registry keys affirmatively misrepresents the function of the specified components.

2) The labels falsely suggest that the components are system files. For example, the .SYS file extension has a special meaning to Windows (e.g. for device drivers and other system components), but the Coupons.com file serves no such "system" function. The registry key names, which refer to (supposed) Explorer AutoTray, URL decoding, and folder presentation settings, all suggest intuitive meanings. But Coupons.com goes on to use these keys for a purpose unrelated to their names.

3) The labels are confusingly similar to genuine Windows components. For example, WindowsShell.Manifest is a bona fide Windows file, but Coupons.com’s "WindowsShellOld.Manifest.1" (emphasis added) has no relationship whatsoever with that file (and is certainly not an "old" version of that file). Similarly, the HKLM\Software\Microsoft\Windows\CurrentVersion\Internet Settings\URLEncoding registry key is required by Internet Explorer, making Coupons’ choice of the similar URLDecoding (emphasis added) especially likely to confuse typical users.
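One rough way to quantify how closely these names track genuine Windows names is a string-similarity ratio. The sketch below uses Python's standard `difflib`; the helper name `name_similarity` is hypothetical, and no particular score is a legal or industry threshold for "confusingly similar."

```python
from difflib import SequenceMatcher


def name_similarity(a, b):
    """Case-insensitive similarity ratio between two names, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


# Pairs taken from the text: the Coupons.com name, then the genuine Windows name.
pairs = [
    ("URLDecoding", "URLEncoding"),
    ("WindowsShellOld.Manifest.1", "WindowsShell.Manifest"),
]
for coupons_name, genuine_name in pairs:
    score = name_similarity(coupons_name, genuine_name)
    print(f"{coupons_name} vs {genuine_name}: {score:.2f}")
```

A score near 1.0 means the two names differ by only a few characters, which is the situation a hurried user is least likely to notice.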

Coupons.com’s choice of registry keys and filenames has a clear purpose and effect: To deter users from deleting the specified keys and files. Even among users sophisticated enough to manually delete unwanted files and registry keys, the chosen registry keys and filenames look so official that removal appears unwise. The typical result is that users will elect to retain these files, mistakenly concluding that these files are part of Windows.

Coupons.com’s deceptive filenames flout industry norms. For example, the Anti-Spyware Coalition’s Best Practices invite anti-spyware vendors to consider whether a program’s “files have easy-to-understand names and are easy for users to find on their computers” — a test Coupons.com clearly fails. Anti-spyware statutes in Texas and Arkansas specifically prohibit deceptively-named files and registry entries that prevent users from removing software, and TRUSTe Trusted Download rules (which bind Coupons.com as a Trusted Download sealholder) also prohibit deceptive naming to avoid removal. These Texas, Arkansas, and TRUSTe requirements admittedly limit their prohibitions to deceptively-named "software" and to deception that hinders program removal. Perhaps Coupons.com manages to escape these rules by deceptively naming its configuration files (rather than its executable code) or by making its executable code (though not its configuration files) easy to remove. Nonetheless, these authorities reveal the public’s discomfort with deceptive naming. If users are to know what is on their computers and why, vendors must name their files in a way reasonable users can understand. Yet Coupons.com intentionally does exactly the opposite.

Failing to remove all Coupons.com components when users request uninstall

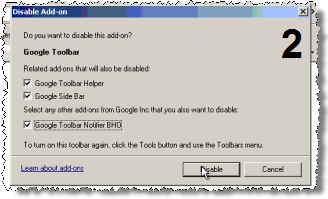

On my test PC, I attempted to uninstall the Coupons.com software in the usual way: Control Panel – Add/Remove Programs – Coupon Printer. The uninstaller claimed to have run successfully. Yet my computer retained the two files and five registry entries set out in the prior section. These files and registry entries remained even after I restarted my test PC.
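This kind of uninstall check can be expressed as a small audit: take everything the installer added, then see which of those items are still present after the uninstaller reports success. The sketch below is a minimal illustration under stated assumptions. The function name `audit_uninstall` is hypothetical, and the `printer.dll` path is invented purely to show an item that was removed.

```python
def audit_uninstall(added_by_install, present_after_uninstall):
    """Split install-time additions into those removed and those left behind."""
    remaining = set(present_after_uninstall)
    leftover = [item for item in added_by_install if item in remaining]
    removed = [item for item in added_by_install if item not in remaining]
    return {"removed": removed, "leftover": leftover}


added = [
    r"c:\windows\uccspecc.sys",
    r"c:\windows\WindowsShellOld.Manifest.1",
    r"c:\program files\example\printer.dll",  # hypothetical path, for illustration
]
after_uninstall = [
    r"c:\windows\uccspecc.sys",
    r"c:\windows\WindowsShellOld.Manifest.1",
]

report = audit_uninstall(added, after_uninstall)
print("left behind:", report["leftover"])
```

An uninstall is complete, in the sense used here, only when the `leftover` list is empty.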

I had requested an "uninstall" of Coupons.com software — not a partial uninstall, but (for lack of any instruction or indication to the contrary) a complete uninstall. The Coupons.com uninstaller had even paused to ask about a specific "shared system file" it wanted special confirmation to delete — further suggesting a thorough removal procedure. The uninstaller ultimately reported that the uninstall was "successful." Nonetheless, the specified components were left on my computer after uninstall.

Coupons.com’s privacy policy fails to disclose that these files and registry keys — embodiments of a user’s ID number, as explained in subsequent sections — are left behind even after uninstall. The privacy policy discusses cookies (a more common way to store user information) in a full paragraph, including three sentences about "persistent cookies" and how users can remove them. The privacy policy therefore seems to cover all user information that Coupons.com stores on users’ PCs. Yet the privacy policy is entirely silent as to the files and registry entries set out above, and as to their retention even after a user attempts to remove Coupons.com software. Neither does Coupons.com’s software license agreement mention these hidden files — neither their existence nor their retention.

The TRUSTe Trusted Download certification agreement requires "an easy and intuitive means of uninstallation" (provision 7). TRUSTe instructs that uninstallation "must remove the Certified Software from the User’s computer." TRUSTe does not specifically speak to the possibility of a program leaving data files behind after uninstall. But where TRUSTe offers an exception to the requirement of complete removal, that exception is tightly limited to serving a user’s direct and immediate interest. (Namely, TRUSTe allows a program to leave behind a shared component that other programs also rely on, since removing that component would disable the other programs.) Furthermore, TRUSTe’s requirement summary demands that "[u]ninstallation must remove all software associated with the particular application" (emphasis added) — broad language suggesting little tolerance for files intentionally left behind. Since TRUSTe offers only a single exception to the requirement of complete removal, and since that exception is so narrow, I believe TRUSTe will likely take a dim view of certified software intentionally failing to uninstall any of its components.

Printing users’ ID numbers onto coupons

Coupons.com Prints a User’s ID Number on Each Coupon

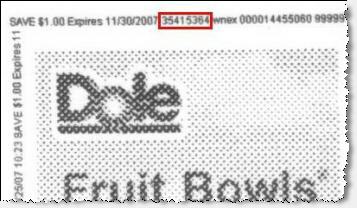

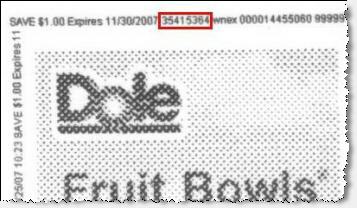

Every coupon printed from Coupons.com bears a series of small numbers. These numbers include the user ID of the user who printed the coupon. See an example coupon printed from my computer, repeatedly reporting my user ID: 35415364.

Coupons.com’s privacy policy does not prominently warn users that Coupons.com will include their user IDs on each printed coupon. As best I can tell, after multiple careful readings of the privacy policy, the only relevant provision is as follows:

Coupons, Inc. discloses "automatically collected" data (such as coupon print and redeem activity) to its Clients and third-party ad servers and advertisers. These third parties may match this data with information that they have previously collected about you under their own privacy policies, which you should consult on a regular basis.

I believe Coupons.com considers user ID numbers to be "automatically collected data," and Coupons.com seems to use the word "Clients" to include product manufacturers as well as retail merchants. On such an interpretation, the quoted language might let Coupons.com print user ID numbers on coupons that are given to retailers and ultimately to merchants. But even if consumers read the quoted language, most consumers will be unable to figure out what it means because the wording is so convoluted and vague.

In lieu of the quoted wording, Coupons.com could simply explain: "We include your user ID on each coupon you print." Such a warning would be clear, concise, and easy to understand. But such a warning would also raise privacy concerns for typical users — perhaps one reason why Coupons.com might prefer more complicated wording.

Allowing third-party web sites to retrieve user ID numbers, in violation of Coupons.com’s privacy policy

Coupons.com Allows Third-Party Sites to Retrieve User ID Numbers

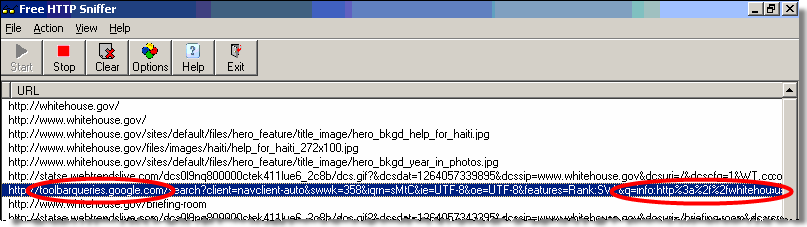

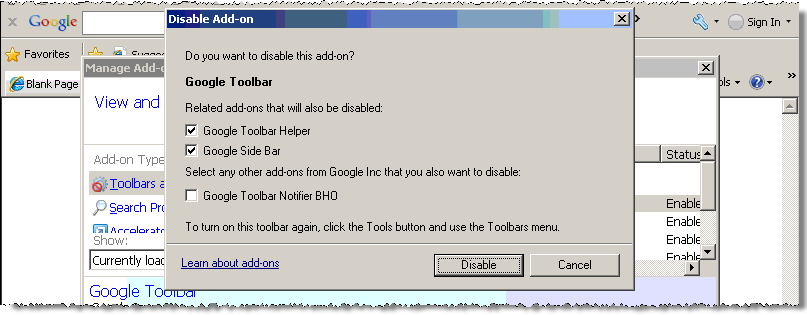

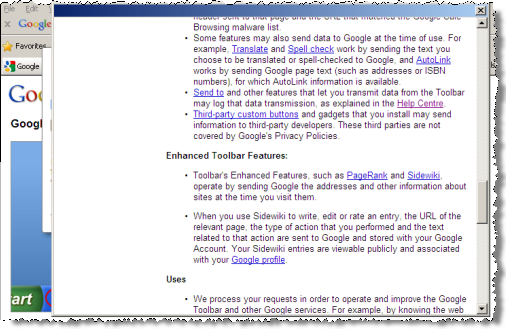

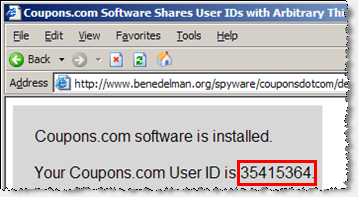

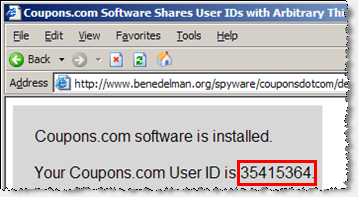

Examining JavaScript code on Coupons.com’s web site, I noticed an apparent design flaw. Testing confirmed my suspicion: Any web page can invoke the "GetDeviceID" method of Coupons.com’s coupon-printing software. The web page then receives the user ID associated with the user’s installation of Coupons.com software.

To confirm this data leakage, see my Coupons.com Software Shares User IDs with Arbitrary Third Parties testing page. If a computer runs current Coupons.com software, this page will display the associated Coupons.com user ID. (However, no information is sent to my web server or otherwise stored or preserved.) This is the exact same ID number that is printed onto users’ coupons. (Screenshot.)

Although Coupons.com user ID numbers appear to be assigned arbitrarily, distribution of these ID numbers raises at least three privacy concerns:

1) This distribution is not permitted under Coupons.com’s privacy policy. Coupons.com’s privacy policy specifically limits the circumstances in which Coupons.com will share user information, and this is not among the circumstances users accept. In particular, Coupons.com says it will disclose certain information to "clients and third-party ad servers and advertisers." But in fact, Coupons.com’s program code makes user IDs available to anyone — even to sites with absolutely no relationship to Coupons.com.

2) Coupons.com user IDs are widespread. As explained in the prior section, a user’s ID is printed onto each coupon the user prints. Broad distribution of user IDs increases the unpredictable consequences of further sharing of ID numbers. For example, a merchant’s web site could cross-check users’ computers against coupons — conceivably even connecting users’ computers back to users’ retail purchase histories. Retailers could similarly use Coupons.com ID numbers to connect a user’s online activity to the user’s in-store shopping habits.

3) Coupons.com user IDs are persistent. Unless a user carefully removes the files and registry entries set out above, uninstalling and reinstalling Coupons.com software will retain the same user ID. A Coupons.com user ID is therefore highly likely to continue to identify the same user over time. In contrast, other identifiers tend to change over time. For example, many ISPs reassign user IP addresses often. Some users delete their cookies in an attempt to increase their online privacy. Because Coupons.com user IDs are unusually hard to remove, Coupons.com user IDs are a particularly effective way for sites to track users over an extended period.

This violation of Coupons.com’s privacy policy occurred despite Coupons.com’s membership in the TRUSTe Web Privacy Seal Program, the TRUSTe Trusted Download Program, and the BBBOnLine Reliability Program. Knowing that Coupons.com software assigns each user an ID number and that Coupons.com accesses these ID numbers through its web site, the prospect of leakage to other web sites (in specific violation of Coupons.com’s privacy policy) was obvious and intuitive. Yet it seems TRUSTe and BBBOnLine failed to check for this possibility. This failure is particularly disappointing since TRUSTe’s Trusted Download program claims to specialize in software testing.

Allowing any person to check whether a given user has printed a given coupon

Coupons.com Confirms that a Given User Has Printed a Given Coupon

Coupons.com Reports that a User Has Not Printed a Given Coupon

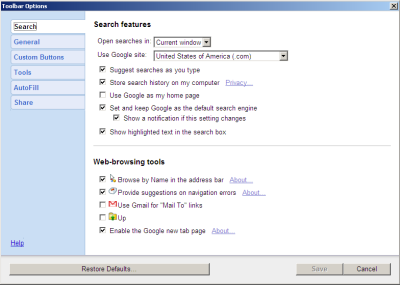

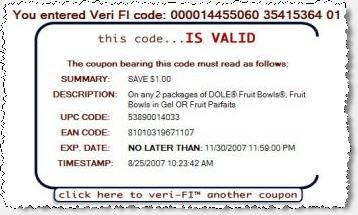

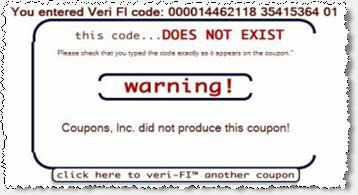

Coupons.com’s Veri-fi service, veri-fi.com, lets any interested person determine whether the coupon is (in Coupons.com’s view) "counterfeit [or] fraudulently-altered." But this same mechanism also lets any person check whether a given Coupons.com user (identified only by the user’s Coupons.com user ID) has printed a given coupon — potentially revealing significant information about the user’s purchasing interests.

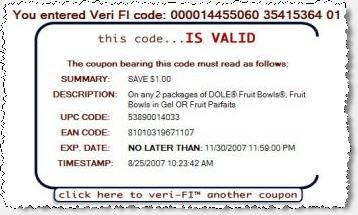

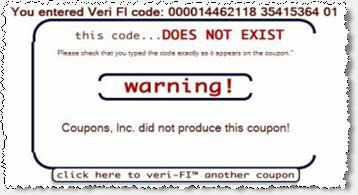

To confirm the effect of Coupons.com’s Veri-fi service, I entered the codes from the example coupon shown above. I received the first confirmation shown at right — indicating that the specified user ID (me) had printed the specified coupon.

I then entered the same user ID, but a different coupon code. In particular, I chose a coupon code associated with a valid Coupons.com coupon that I had never printed using the specified user ID. As shown in the second screenshot at right, Veri-fi reported that this second code was invalid. That is, Veri-fi reported that the specified user ID had never printed the specified coupon.

Veri-fi seems to work just as Coupons.com intended. However, combining the Veri-fi verification system with the widespread distribution of Coupons.com user IDs (both in print and through JavaScript), Coupons.com reveals detailed information about which users have requested which coupons. Via the JavaScript interface, a web site can easily extract a user’s Coupons.com user ID. Then, via Veri-fi, the web site can check which coupons the user has printed. The web site can thereby build a rich profile of the user’s purchasing interests — despite the promise in Coupons.com’s privacy policy that such information would be distributed only to Coupons.com’s clients, ad servers, and advertisers.
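The inference chain can be modeled abstractly: given any yes/no oracle that answers "did this user ID print this coupon code?", a party holding a user ID and a list of candidate codes can reconstruct that user's printing history. The sketch below is a toy model only. It does not contact Veri-fi or reproduce its interface; the IDs, coupon codes, and the `fake_oracle` stand-in are all invented for illustration.

```python
def build_profile(user_id, candidate_codes, has_printed):
    """Return the candidate coupon codes that the oracle confirms for user_id."""
    return [code for code in candidate_codes if has_printed(user_id, code)]


# Hypothetical stand-in for a verification service: an in-memory print log.
PRINT_LOG = {("1001", "CEREAL-A"), ("1001", "DIAPERS-B")}


def fake_oracle(user_id, code):
    """Answer whether the (user_id, code) pair appears in the print log."""
    return (user_id, code) in PRINT_LOG


profile = build_profile("1001", ["CEREAL-A", "SODA-C", "DIAPERS-B"], fake_oracle)
print(profile)
```

The point of the model is that neither piece of information is sensitive alone; the exposure comes from combining an easily obtained ID with an unrestricted lookup.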

Strikingly, Coupons.com fails to limit Veri-fi to bona fide coupon validators (e.g. retailers and manufacturers). In fact, Veri-fi lacks even a Terms of Service document or a license agreement to attempt to limit who uses the site.

Update (August 28, 2007 – 3:35pm): Coupons.com has contacted me to report that the Veri-fi site no longer allows the data retrieval described above.

Implications & Consequences

A user visiting Coupons.com reasonably expects to get free coupons. Unfortunately, Coupons.com’s practices far exceed anything described in marketing materials, EULA, or privacy policy. Would users join Coupons.com if they knew they had to receive deceptively-named files? That uninstall would leave files behind for possible use later? That every printout would carry a user ID that could be linked to a user’s full coupon-printing history? That Coupons.com’s software and web site would distribute user information in ways even Coupons.com probably didn’t anticipate? We can’t know the answers to these questions because Coupons.com never gave users the opportunity to decide. But with full disclosure, users might well choose to get their coupons elsewhere.

Coupons.com prominently touts its certifications from TRUSTe (including TRUSTe’s new Trusted Download Program) and BBBOnLine. But when these organizations learn of Coupons.com’s specific practices, I doubt they’ll be impressed. Coupons.com’s practices are in tension with various TRUSTe rules, including a Trusted Download prohibition on certain deceptive filenames and registry keys, as well as TRUSTe’s general prohibition on privacy policy violations. More generally, it’s hard to call a program "trusted" when it uses deceptive names to hide some of its key files, when it fails to remove itself fully upon a user’s specific request, and when it makes available users’ identifying information despite privacy policy promises to the contrary. Retaining the credibility of Trusted Download probably requires that TRUSTe take action either to correct Coupons.com’s practices or to sever TRUSTe’s ties to Coupons.com.

Coupons.com could easily fix some of these bad practices. A new version of Coupons.com’s software could prevent arbitrary web sites from retrieving user ID numbers. Coupons.com could stop printing users’ ID numbers on each coupon, or could prominently tell users that each coupon bears a user ID. Coupons.com could limit Veri-fi access to retailers and manufacturers.
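As one illustration of the first fix, software could compare the requesting page's host against an allowlist before returning a user ID. The sketch below shows the general idea in Python, not Coupons.com's code or any fix it actually shipped; the function name `may_read_user_id` and the allowlist contents are assumptions.

```python
from urllib.parse import urlsplit

ALLOWED_DOMAINS = ("coupons.com",)  # assumption: the vendor's own domain only


def may_read_user_id(page_url, allowed_domains=ALLOWED_DOMAINS):
    """Allow access only for pages on an allowed domain or one of its subdomains."""
    host = (urlsplit(page_url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in allowed_domains)


print(may_read_user_id("https://www.coupons.com/offers"))
print(may_read_user_id("https://coupons.com.example.org/"))
```

Matching on the exact host or a dot-prefixed suffix matters: a naive substring check would wrongly accept a look-alike host such as `coupons.com.example.org`.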

With effort, Coupons.com could track users’ coupon-printing without underhanded tactics like deceptive files and registry entries. For one, Coupons.com could label its files and registry keys appropriately — treating its users with dignity and respect, rather than assuming users will try to cheat. Alternatively, Coupons.com could recognize computers on which it has previously been installed, without resorting to deceptive files or registry entries. (Direct Revenue built such a system — checking a user’s ethernet address, Windows product key, etc. in order to identify repeat installations.) Simpler yet, Coupons.com could request users’ email addresses, and use duplicate addresses to recognize repeat users. Coupons.com may worry that email addresses offer inadequate security, but eBay (PayPal), Google, and others have used this method even for larger offers (as large as $5 – $10).
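The email-based approach is simple to sketch. The example below counts prints per normalized email address and per offer; the class name `PrintLimiter`, the limit of two prints, and the offer identifiers are all hypothetical, since the article does not state what limits Coupons.com applies. Lowercasing the whole address is a simplification that treats differently capitalized addresses as the same user.

```python
class PrintLimiter:
    """Track prints per normalized email address and enforce a per-offer limit."""

    def __init__(self, max_prints=2):
        self.max_prints = max_prints
        self._counts = {}

    def try_print(self, email, offer_id):
        """Record a print and return True, or return False if the limit is reached."""
        key = (email.strip().lower(), offer_id)
        if self._counts.get(key, 0) >= self.max_prints:
            return False
        self._counts[key] = self._counts.get(key, 0) + 1
        return True


limiter = PrintLimiter(max_prints=2)
for attempt in ("A@example.com", " a@example.com ", "a@EXAMPLE.com"):
    print(attempt.strip(), limiter.try_print(attempt, "offer-1"))
```

Because the count lives on the server and is keyed to something the user knowingly provided, nothing needs to be hidden on the user's computer.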

Coupons.com’s practices fit the historical problems with digital rights management (DRM) software that attempts to constrain what users can do with their own computers. Compare Coupons.com’s approach to the notorious Sony CDs which used a rootkit to conceal Sony’s DRM software. Just as Sony had to rely on a rootkit to hide its DRM software from users who otherwise would have chosen to remove it, Coupons.com hides user IDs in obscure files and registry keys. Just as Sony’s disclosures were less than forthright, so too does Coupons.com fail to tell users what it is doing and how. Based on their examination of software to constrain access to digital music, Ed Felten and Alex Halderman previously explained the core problem: So long as users don’t want a given piece of code on their computers, vendors are forced to conceal their efforts to put it there and to keep it there. As to Coupons.com, some users do want the core functionality. But tracking users after uninstall is sufficiently noxious that Coupons.com knows it must cover its tracks lest users notice. Coupons.com thus finds itself in the same DRM predicament that ensnared Sony.

FullContext Spyware Injects Coupons.com Ads Into Google

Coupons.com is currently suing John Stottlemire, who Coupons.com claims told users "how to beat the limitation imposed by the software provided by coupons.com." (Complaint paragraph 20.) Coupons.com alleges that Stottlemire "created and used software that purported to remove Plaintiff’s security features, for the purpose of printing more coupons than [Coupons.com’s] security features allow." (Paragraph 21) Coupons.com claims that Stottlemire’s practices violate the Digital Millennium Copyright Act, among other causes of action. I can’t speak to the merits of Coupons.com’s claim. But perhaps Coupons.com would do better to focus on protecting users’ privacy and on complying with its privacy policy.

Coupons.com’s behaviors are particularly notable because they extend to multiple coupon-printing programs distributed by literally thousands of web sites. Although I primarily tested Coupons.com’s Coupon Bar software (version 5.0), it seems Coupons.com’s Coupon Printer 4.1 shares all relevant characteristics. (These are the two Coupons.com programs that have been certified by TRUSTe’s Trusted Download.) In addition to distribution at the Coupons.com web site, these programs are also offered by numerous partner sites. Coupons.com’s marketing materials claim more than 1500 such sites, including the LA Times, Washington Times, and Philly.com.

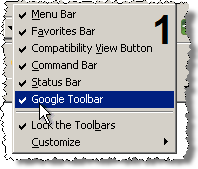

Coupons.com’s online advertising strategy raises trust and privacy questions similar to those presented by Coupons.com’s coupon-printing software. Twice this year I’ve seen and recorded Coupons.com ads shown through spyware: First through the FullContext ad injector (which put Coupons.com ads into the top of Google.com, above Google’s logo), and later through Targetsaver full-screen pop-up ads. Screenshots. Both programs are widespread and known for installing without consent, among other anti-consumer practices. To be a respected player in the online advertising economy, Coupons.com must do more to avoid funding these spyware vendors and the unsavory ecosystem they represent.

Update on Coupons.com’s Response, My Critique, and TRUSTe’s Decision (September 23, 2007)

After I posted the article above, Coupons.com circulated a two-page response. Among other claims, the response argues that the specified registry keys and filenames are "not deceptive." I emphatically disagree. The components are intentionally named to look like they’re part of Windows, and they’re placed in locations where Windows components ordinarily appear. These practices are exactly intended to mislead users as to the components’ purpose. That’s the essence of deception.

Coupons further claims the "user ID" I describe is in fact a "device ID." As a threshold matter, that would change none of my analysis. But the word "user" comes directly from Coupons.com’s own source code. Coupons’ JavaScript code references a function called "GetUserCode()" (emphasis added) and passes an OBJECT PARAM tag with value USERID (emphasis added). Elsewhere, Coupons.com uses the abbreviation "txtUID" — i.e. a text field storing a user ID. With these repeated "user" references appearing in code written by Coupons.com, Coupons.com cannot credibly claim I err in my use of that same term.

Coupons.com goes on to argue it ought not be responsible for third parties using Coupons.com’s software to obtain user IDs. Coupons says "it is hard to imagine how a third party’s unauthorized use of our software — a sort of trespass … — constitutes our violation of our own privacy policy." I disagree. Perhaps Coupons.com should begin by rereading its privacy policy. Within the heading "our commitment to data security," Coupons.com specifically promises to "use[] commercially reasonable physical, managerial, and technical safeguards to preserve the integrity and security of your personal information." Coupons.com cannot in good conscience claim it is a "commercially reasonable" "technical safeguard" to allow any web site to invoke a simple JavaScript method of Coupons.com’s software. Quite the contrary, this is poor design — falling far short of industry norms for data protection.

Meanwhile, Coupons.com has updated its installer to show a new license agreement. If a user scrolls to the second screen of the license agreement, the user is told of "License Keys" to be placed on the user’s computer. To Coupons.com’s credit, the installer now discloses that these files will not be removed upon uninstall. But the files continue to be placed in deceptive locations in the user’s Windows directory and in the dedicated Windows section of the user’s registry — a fact nowhere disclosed to users.

On August 31, I filed a watchdog complaint with TRUSTe as to the Coupons.com practices set out above. In response, TRUSTe told me it will require Coupons.com to change its naming system "to avoid looking like … other popular software" (i.e. Windows). TRUSTe will also require Coupons.com to offer a new version of its software that removes deceptive files and registry entries left over from prior versions. (TRUSTe’s blog describes these same requirements, albeit in terms less stark than the email TRUSTe sent me.) These are certainly steps in the right direction. Were the decision mine to make, I doubt I’d keep Coupons.com on the Trusted Download whitelist during a period when the company’s practices are known to fall short. But that’s a topic for another day.

Meanwhile, Coupons.com continues litigation against John Stottlemire. I’ve been in touch with John. I’ve learned that his software — which would have removed Coupons.com’s deceptive files and registry entries upon a user’s specific request — was actually never distributed to anyone but Coupons.com. (John’s web server detected Coupons.com’s IP addresses and granted them access, even before John was prepared to make the software available to anyone else.) This fact leaves me all the more doubtful of Coupons.com’s litigation strategy. John’s software was never used by even a single user. And John’s software would have done nothing more than remove the deceptively-named components TRUSTe is now ordering Coupons.com to remove itself. I remain hopeful that Coupons.com will withdraw this ill-fated attempt to silence a critic. Pending that, I’ve added John’s plight to my spyware threats page.

Finally, Coupons.com’s sneaky tactics continue to undermine its standing in the security community. Some top anti-spyware programs now detect Coupons.com — and rightly so, in my view. Users with Coupons.com software deserve extra information — not forthcoming from Coupons.com — about what the software does and why users might not want it.